Neural networks, particularly artificial neural networks (ANNs), are a foundational element of modern artificial intelligence and machine learning. They are computational models loosely inspired by the structure and function of biological neural networks in the brain. Neural networks learn to recognize patterns in data, which makes them effective for tasks such as classification, regression, and the generation of new data.

Basic Components:

- Neurons (Nodes): These are the basic units of a neural network. They receive input, process it, and produce an output. The processing typically involves a weighted sum of the inputs followed by the application of an activation function.

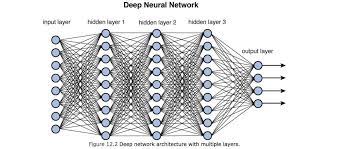

- Layers:

  - Input Layer: The first layer, consisting of neurons that receive the input features.

  - Hidden Layers: The layers between the input and output layers. They transform the data through successive learned representations and perform most of the network’s computation.

  - Output Layer: The final layer, which produces the network’s predictions or classifications.

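- Weights & Biases: The learnable parameters of the network. Each weight scales one input to a neuron, and each bias is a constant added to the neuron’s weighted sum. Both are adjusted during training to minimize a loss function that measures prediction error.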

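- Activation Function: A function applied to a neuron’s weighted sum to produce its output, introducing non-linearity into the model. Without it, any stack of layers would reduce to a single linear transformation. Common activation functions include sigmoid, tanh, ReLU (Rectified Linear Unit), and softmax; softmax acts on a whole layer’s outputs at once and is typically used in the output layer for multi-class classification, where it turns scores into probabilities.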
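The components above can be sketched in plain Python: a single neuron computes a weighted sum of its inputs plus a bias, then passes the result through an activation function. This is a minimal illustration only; the function names (`neuron`, `relu`, and so on) and the example numbers are hypothetical and not taken from any particular library.

```python
import math

def sigmoid(z):
    # Squashes any real number into the range (0, 1).
    return 1.0 / (1.0 + math.exp(-z))

def tanh(z):
    # Squashes into (-1, 1); zero-centred, unlike sigmoid.
    return math.tanh(z)

def relu(z):
    # Rectified Linear Unit: passes positive values through, zeroes out negatives.
    return max(0.0, z)

def softmax(scores):
    # Turns a vector of scores into probabilities that sum to 1.
    # Subtracting the max first avoids overflow in math.exp.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def neuron(inputs, weights, bias, activation):
    # Weighted sum of the inputs plus the bias (the "pre-activation")...
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    # ...followed by the non-linear activation function.
    return activation(z)

# Hypothetical example values.
x = [0.5, -1.0, 2.0]
w = [0.4, 0.3, -0.2]
b = 0.1
# Weighted sum: 0.2 - 0.3 - 0.4 + 0.1 = -0.4 (up to floating-point rounding)
print(neuron(x, w, b, relu))     # 0.0, since ReLU zeroes negative inputs
print(neuron(x, w, b, sigmoid))  # approximately 0.401
print(softmax([2.0, 1.0, 0.1]))  # three probabilities summing to 1
```

Note that sigmoid, tanh, and ReLU act on one neuron’s value, while softmax takes the whole vector of output scores.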

Working Mechanism:

- Forward Propagation: Input data is passed through the network layer by layer until it reaches the output layer. Each layer computes weighted sums of its inputs, applies an activation function, and passes the result to the next layer; the output layer’s values are the network’s prediction.

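- Backward Propagation (Training): The network’s prediction is compared with the true target using a loss function, which measures the error. Backpropagation then applies the chain rule to compute the gradient of the loss with respect to every weight and bias, passing the error signal from the output layer back toward the input. An optimization algorithm, most often a variant of gradient descent, uses these gradients to update the parameters.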

- Iteration: Forward and backward propagation are repeated over the training data for many epochs (one epoch is a full pass through the training set) until the loss stops improving or reaches an acceptable value.
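The forward pass, backward pass, and epoch loop described above can be sketched for a tiny network with one hidden layer, trained on the XOR function. This is a minimal sketch under several assumptions not stated in the text: plain Python lists instead of a tensor library, sigmoid activations, mean squared error as the loss, per-example (stochastic) gradient descent, and hypothetical hyperparameters (`LR`, `EPOCHS`, four hidden neurons). Deep-learning frameworks normally compute these gradients automatically.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# XOR: not linearly separable, so a hidden layer is required.
DATA = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]
N_IN, N_HID = 2, 4
LR = 0.5       # learning rate (hypothetical value)
EPOCHS = 5000  # full passes over the training data (hypothetical value)

random.seed(0)
# Hidden layer: one weight row and one bias per hidden neuron.
W1 = [[random.uniform(-1, 1) for _ in range(N_IN)] for _ in range(N_HID)]
b1 = [0.0] * N_HID
# Output layer: a single sigmoid neuron.
W2 = [random.uniform(-1, 1) for _ in range(N_HID)]
b2 = 0.0

def forward(x):
    # Forward propagation: input -> hidden (sigmoid) -> output (sigmoid).
    h = [sigmoid(sum(w * xi for w, xi in zip(W1[j], x)) + b1[j]) for j in range(N_HID)]
    y = sigmoid(sum(w * hj for w, hj in zip(W2, h)) + b2)
    return h, y

def mean_loss():
    # Mean squared error over the whole training set.
    return sum((forward(x)[1] - t) ** 2 for x, t in DATA) / len(DATA)

initial_loss = mean_loss()
for epoch in range(EPOCHS):
    for x, t in DATA:  # stochastic gradient descent: update after each example
        h, y = forward(x)
        # Backward propagation via the chain rule.
        # dL/dz_out for L = (y - t)^2 with y = sigmoid(z_out):
        d_out = 2.0 * (y - t) * y * (1.0 - y)
        # Error signal for each hidden neuron (computed with W2 before it changes).
        d_hid = [d_out * W2[j] * h[j] * (1.0 - h[j]) for j in range(N_HID)]
        # Gradient descent updates: parameter -= LR * gradient.
        for j in range(N_HID):
            W2[j] -= LR * d_out * h[j]
            for i in range(N_IN):
                W1[j][i] -= LR * d_hid[j] * x[i]
            b1[j] -= LR * d_hid[j]
        b2 -= LR * d_out
final_loss = mean_loss()

print(f"loss: {initial_loss:.4f} -> {final_loss:.4f}")
for x, t in DATA:
    print(x, "target", t, "prediction", round(forward(x)[1], 3))
```

XOR is a convenient test case because no single straight line separates its two classes, so the hidden layer’s non-linearity is what makes it learnable. A network this small can occasionally stall in a poor local minimum; a different seed or more hidden neurons usually helps.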

Learning: The network “learns” from data by adjusting its weights and biases in response to the error it produces on training examples. Over many iterations, these adjustments enable the network to approximate complex, non-linear functions.
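As a hypothetical worked example of one such adjustment, consider a single weight $w$ with no bias or activation, a squared-error loss, one training example $(x, t) = (2, 1)$, a starting value $w = 0.8$, and a learning rate $\eta = 0.1$ (all values chosen purely for illustration):

$$
\begin{aligned}
L(w) &= (wx - t)^2 = (0.8 \cdot 2 - 1)^2 = 0.36 \\
\frac{\partial L}{\partial w} &= 2(wx - t)\,x = 2 \cdot 0.6 \cdot 2 = 2.4 \\
w_{\text{new}} &= w - \eta \frac{\partial L}{\partial w} = 0.8 - 0.1 \cdot 2.4 = 0.56 \\
L(w_{\text{new}}) &= (0.56 \cdot 2 - 1)^2 = 0.0144
\end{aligned}
$$

One step against the gradient cuts the loss from 0.36 to 0.0144. In a full network, backpropagation supplies the corresponding partial derivative for every weight and bias.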

Types of Neural Networks:

- Feedforward Neural Networks (FNN): The simplest type, in which data flows in one direction, from the input layer through the hidden layers to the output layer, with no loops.

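- Convolutional Neural Networks (CNN): Designed primarily for grid-like data such as images. Their convolutional layers slide small learned filters across the input, reusing the same weights at every position, and stacking these layers lets the network learn spatial hierarchies of features (for example, edges, then textures, then object parts).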

- Recurrent Neural Networks (RNN): Suited to sequential data such as text or time series. They contain loops that carry a hidden state from one time step of the sequence to the next, so earlier inputs can influence later outputs.

- Deep Neural Networks (DNN): Neural networks with multiple hidden layers. “Deep learning” refers to training such networks; CNNs, RNNs, and Transformers are usually deep as well.

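- Others: Many other specialized architectures exist, including LSTM (Long Short-Term Memory) and GRU (Gated Recurrent Unit) networks, which are gated RNN variants designed to capture long-range dependencies, and Transformer networks, which rely on attention mechanisms instead of recurrence.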
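The core operations behind CNNs and RNNs can be sketched in a few lines. This is a simplified illustration under stated assumptions: a one-dimensional, single-channel convolution rather than the 2-D, multi-channel convolutions used on images, and a scalar RNN with a tanh activation. The function names `conv1d` and `rnn` and their parameter values are hypothetical.

```python
import math

def conv1d(signal, kernel):
    # "Valid" 1-D convolution as used in CNNs (strictly, cross-correlation:
    # the kernel is not flipped). The same kernel weights are reused at every
    # position, which is the weight sharing that makes CNNs efficient.
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k))
            for i in range(len(signal) - k + 1)]

def rnn(sequence, w_x, w_h, b):
    # Minimal scalar RNN: the hidden state h is the "loop" that carries
    # information from one time step to the next.
    h = 0.0
    states = []
    for x in sequence:
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

# A [1, 0, -1] kernel responds to change between neighbouring values.
print(conv1d([1, 2, 3, 4, 5], [1, 0, -1]))  # [-2, -2, -2]
# Later states stay non-zero even though later inputs are 0:
# the first input persists through the hidden state.
print(rnn([1.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0))
```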

Strengths:

- Ability to model non-linear relationships.

- Can learn useful features directly from data rather than relying on hand-engineered rules.

- Highly versatile and can be applied to a wide range of tasks.

Limitations:

- Typically require large amounts of training data.

- Computationally intensive to train, often requiring GPUs or other specialized hardware.

- Can be seen as a “black box,” making them harder to interpret compared to simpler models.

In summary, neural networks are a class of models that have proven highly effective at many AI tasks, especially when the data contain complex, non-linear relationships. Their biologically inspired structure and adaptability make them a cornerstone of modern AI systems.